Data Sovereignty or Cloud Convenience?

Practical lens for business

I was asked to research the differences between running a large language model (LLM) locally and using premium cloud-based AI services, with a focus on their terms and conditions (T&Cs), and to come up with some practical suggestions for business users.

I began by asking ChatGPT to carry out some deep research to help me frame the investigation. That path quickly led to some surprising and concerning discoveries, and even then, we (GPT and I) only scratched the surface of the implications.

The short and sweet of it

Local AI On-Premise

Definition: The organisation runs the model on its own hardware and keeps prompts and outputs internal, with no dependency on an internet connection.

- Complete control of data: No third-party access, no reliance on provider T&Cs

- Air-gapped operation capability: Can be set up to run even without internet connectivity

- Compliance advantage: Because data never leaves your environment, there is no cross-border transfer that could breach data sovereignty rules.

Trade-offs: Locally run models may lag behind the latest cloud-only models. You must manage updates, optimisation, integration and maintenance yourself, and there is no vendor SLA unless you arrange one yourself. Performance depends on your infrastructure choices (GPUs, storage, etc.).

Cloud Provider-Hosted AI

Definition: Prompts and data leave your premises and are processed on a third-party provider’s servers (e.g. OpenAI, Google, Microsoft).

- Easy to start: Just log in and start typing, with instant access to the latest models and no infrastructure of your own to maintain.

- Managed service: The provider handles continuous updates and offers straightforward integration.

Trade-offs: You have almost no control over your data, which always leaves your environment and is often processed and stored overseas. You are bound by the provider’s T&Cs (e.g. OpenAI, Google, Microsoft) and subject to foreign legal regimes (e.g. the U.S. CLOUD Act and Patriot Act, and potential conflicts with the EU GDPR). Sensitive information must be handled very carefully to avoid compliance or contractual breaches.

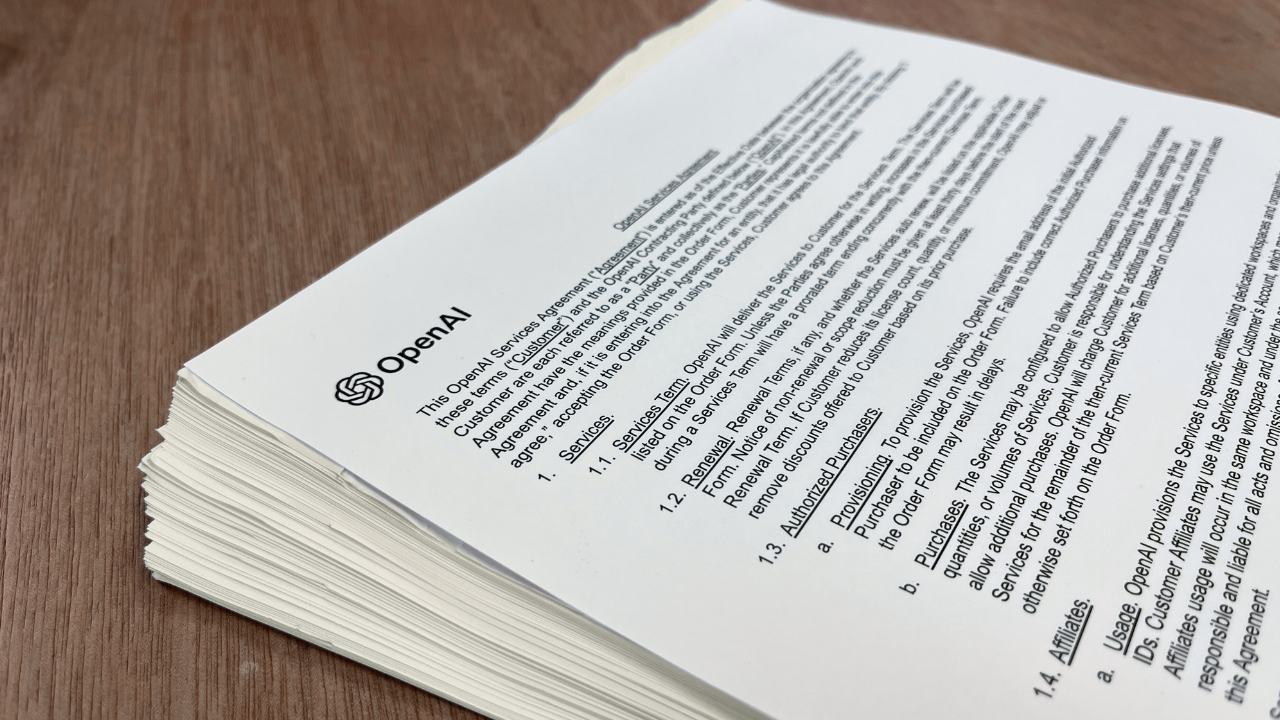

Take a closer look at those T&Cs

Non-Negotiable Contracts

For most businesses, cloud AI providers offer non-negotiable, ‘take-it-or-leave-it’ contracts. These standard-form agreements, which are often long and wordy, set terms such as governing law, liability limits and data usage. Typically only very large enterprises have the leverage to negotiate bespoke arrangements. Studies have also found that customers usually accept these lengthy contracts without negotiation or full awareness of the terms; while that research was conducted in Europe, other studies show similar behaviour among customers worldwide. As a result, providers can impose one-sided conditions that favour themselves; for example, many contracts disclaim the provider’s responsibility for data loss or breaches. This leaves businesses bearing the risk if something goes wrong, with little recourse under the contract.

"Most users do not actually read these voluminous T&Cs - one study found 91% of people consent without reading, and some popular apps’ terms would take 17 hours to read in full - so businesses need to be extra vigilant to understand what rights they might be signing away."

Data Beyond Borders – Australian Data Stored in Non-Australian Cloud Environments - Australian Cyber Security Magazine

Data Ownership and Usage Rights

The fine print in terms and conditions can undermine a customer’s ownership of their data. By agreeing, users often grant the provider broad licenses to use, store, or even share their content. In practice, this means a business’s data (or even intellectual property) given to a cloud AI service might be used by the provider for purposes like improving its AI models or services. For instance, some generative AI platforms state that user inputs can be used to train AI models unless the user opts out or uses a premium plan. Such clauses create an opaque landscape of ownership rights, making it hard for businesses to retain control over sensitive information. In some cases, the T&Cs even stipulate that any legal disputes must be handled in the provider’s home country, discouraging or complicating challenges by foreign customers.

“You give Google (and those we work with) a worldwide license to use, host, store, reproduce, modify, create derivative works (such as those resulting from translations, adaptations or other changes we make so that your content works better with our Services), communicate, publish, publicly perform, publicly display and distribute such content.”

Google

Terms of Service – Privacy & Terms – Google

This is a very broad license.

Even though Google says you retain IP ownership, the rights you grant are so expansive that effective control is diluted.

“Microsoft may transfer Customer Data to, and store and process it in, the United States or any other country in which Microsoft or its affiliates or subcontractors maintain facilities.”

Microsoft

Online Services Terms

This explicitly states that data may be moved across borders for storage and processing.

“OpenAI may use Content to provide and maintain the Services, comply with law, and enforce our policies.”

OpenAI

Terms of use | OpenAI

This is more limited than Google’s licence, but it is still a contractual right to use customer inputs and outputs, which could include sensitive or proprietary data. The phrase “to provide and maintain the Services” could cover almost any part of OpenAI’s business, including the third parties that provide services to it, and those third parties have their own service providers, and so on.

Liability Limitations and Security

Cloud AI contracts typically limit the provider’s liability for outages or security incidents. Providers may cap their responsibility or damages regardless of the impact on the customer’s business. As one survey of cloud contracts notes, customers are bound by terms that heavily limit what they can claim if data is destroyed or exposed, and once accepted, these clauses are legally binding even if they seem unreasonable from a business-risk standpoint. If customer data is destroyed, exposed, or corrupted, recovery is capped at trivial amounts (often just the fees paid recently).

Uncertainty of Data Location and Jurisdiction

Cloud AI services often replicate and move data across global data centres for efficiency, so businesses rarely know where their data is physically stored at any given time. For example, a business might upload data in Australia, but the provider’s terms might allow that data to be processed or backed up in the US or elsewhere.

This lack of control over data location can lead to compliance headaches: companies might inadvertently violate data residency rules or face foreign legal exposure without realising it.

For Australian businesses, this creates risk under the cross-border disclosure obligations in the Australian Privacy Principles (Schedule 1 of the Privacy Act 1988). If an Australian company uses a U.S. or EU cloud provider, it remains liable under Australian law if that provider mishandles the data.

Do you know where your data, or your clients’ data, is at any given time?

What does it mean to be subject to foreign laws?

When businesses rely on cloud AI providers based in other countries, they must contend with laws that reach across borders. The clearest example is the extraterritorial reach of U.S. law: many leading AI cloud providers are subject to United States law, which can apply globally. Under the U.S. CLOUD Act (2018), American authorities can compel U.S.-based providers (like those hosting AI platforms) to hand over data regardless of where the data is physically stored. For an Australian company using a U.S. cloud AI service, this means data kept on servers in Sydney or Singapore could still be produced to U.S. law enforcement via the provider. This raises obvious sovereignty and privacy concerns: in effect, foreign (U.S.) government agencies could access Australian business or customer data without going through Australian courts.

Australian companies can be forced into a no-win situation

An Australian company using a U.S. cloud AI service faces a dilemma: obey a U.S. data demand and risk breaching APP 8, or comply with Australian law and defy U.S. orders.

Under Australian Privacy Principle 8 (APP 8) of the Privacy Act 1988, an organisation that sends personal information overseas must “take reasonable steps to ensure that the overseas recipient does not breach the APPs in relation to the information” (OAIC).

VS

U.S. law: The CLOUD Act (2018) allows U.S. authorities to compel providers to hand over data “regardless of whether such communication… is located within or outside of the United States” (Congress.gov).

This is not hypothetical

Example 1: EU GDPR vs U.S. CLOUD Act: The IAPP notes, “The CLOUD Act creates a direct conflict of laws … while U.S. providers must comply with U.S. orders, they may at the same time be prohibited under EU law from disclosing the data” (IAPP).

Example 2: Schrems II Case (2020): The Court of Justice of the EU struck down the EU–US Privacy Shield because U.S. surveillance laws were judged too intrusive for EU citizens’ data (CJEU Judgment C-311/18).

When using global AI providers, businesses enter a legal minefield where it isn’t clear which law prevails, and individual firms cannot resolve these conflicts on their own. The Australian Cyber Security Magazine warns: “Foreign legislation’s extraterritorial reach, notably the US CLOUD Act, poses significant challenges to Australian data sovereignty.”

Advice for Australian Companies

- Read the fine print: Cloud AI contracts cap liability, grant broad data-use rights, and lock disputes to U.S. courts.

- Know your obligations: Under Australia’s Privacy Act 1988, you remain responsible if overseas providers mishandle personal data.

- Expect conflicts: U.S. CLOUD Act orders can clash with Australian privacy law, leaving you exposed either way.

- Local to Australia isn’t absolute: Even “onshore” storage may still be accessed under foreign laws or alliances like Five Eyes.

- Be proactive: Use enterprise tiers that exclude your data from model training, encrypt data with your own keys, and set clear internal rules on what staff can and can’t upload.

Traffic Light System with a handy decision tool

| Traffic Light | Type of Data | Decision tool |
|---|---|---|
| 🟢 Green (Safe) | | Ask yourself: If yes = safe to use. |
| 🟡 Amber (Caution) | | If yes = proceed carefully, otherwise treat it as 🔴 Red. |
| 🔴 Red (Stop) | | |

The Limits of Traffic Lights and Policies

Internal rules reduce exposure, but they don’t remove the problem. The combination of human error, convenience pressure, and unclear AI boundaries means companies remain at risk of leaks, compliance breaches, and reputational damage.

- Time sink: Staff trying to anonymise contracts, emails, or reports before pasting them into ChatGPT often find it takes longer than just doing the task manually.

- Human error: Anonymisation is imperfect. Research shows around 30–60% of employees admit to entering sensitive or high-risk data into generative AI tools anyway, even when their workplace policies forbid it.

- Pressure to deliver: Under time pressure, workers cut corners. In one global survey, 4 in 10 employees said they would knowingly violate AI rules if it helped them get work done faster.

- False safety: Even when redacted, data can contain context clues that identify clients, projects, or individuals. Regulators in Australia have already investigated cases where anonymised data fed into AI still amounted to a privacy breach.

Benefits of Having AI On-Prem

Most companies today rely on AI in the cloud. But what if you could run the model entirely on your own servers, with zero data ever leaving your environment?

- Full control over data: Everything stays on your own servers, meaning no third-party access, no surprise licensing clauses, and no risk of offshore disclosure.

- Offline by design: Models can run air-gapped, completely disconnected from the internet, so sensitive work never leaves your environment.

- Regulatory certainty: APP 8 compliance is simpler when data never crosses borders. You avoid the clash between Australian privacy law and foreign orders like the U.S. CLOUD Act.

- Customisable to your needs: Retention rules, fine-tuning, and integrations can all be managed to suit the business, rather than whatever defaults a cloud provider offers.

- Small-scale still works: Even modest on-prem models can speed up document review, analysis, or drafting without giving up sovereignty.

How Do You Decide? A Practical Lens for Choosing Cloud vs On-Prem AI

| Lens | What to consider |
|---|---|
| Strategic Lens | Is AI just a tool you rent, or a capability you own? Investing in local AI can be slower at first, but it builds a platform you control, one that scales with your business instead of someone else’s terms. |
| Risk vs Reward | Ask yourself: if a single employee slips and pastes sensitive data into a cloud AI, what’s the potential fallout: lost clients, regulatory action, or exposure of trade secrets? Is the risk to your IP or your clients’ data worth the convenience of not managing your own model? |
| Real-World Lessons | Samsung banned ChatGPT after engineers leaked source code. Would your business survive a similar slip-up? |
| Compliance Reality Check | If a regulator asks how customer data ended up in a U.S. training set, what answer would you rather give: “Our staff made an error” or “We never send sensitive data offshore in the first place”? |
| Values and Control | Whose rules do you want to live by? On-prem AI means your data, your rules. Cloud AI means their data, their rules. Which aligns better with your obligations to clients, partners, and regulators? |
